Teach About AI Without Using It? Here's What I Mean

When I started to focus on what durable AI education curricula might look like, I knew I was on a different railroad track than everyone else.

Most were and still are focused on how to use AI products, and on getting their heads around what it might mean. Many are still more emotional than rational about it.

I was looking past that. Oh, I try to help the introductory AI conversations, and I conduct some of that training and coaching myself. But then what? Will all AI training amount to learning a prompt technique or being able to recite an ethical stance?

It better not be, but I think there’s a big danger that it will. The tendency I see is to throw hands up and point to some giant durable skill that schooling has long wanted to instill. “The rest is critical thinking, creativity, collaboration…that we’re already focused on.”

But school learning objectives need to be more strategic than that. I think the big issue is that people assume any AI principles immediately become specialist computer science material beyond the typical educator’s training. That’s not true at all. There’s a large body of key knowledge and meta-knowledge about AI that few have been exposed to, including in Computer Science instruction. And yet it’s easily digestible by any adult, with no fancy math required. Educators are especially qualified, since things like pedagogy/andragogy have direct conceptual parallels with teaching AI (which, by the way, is what we’re doing every time we interact with it).

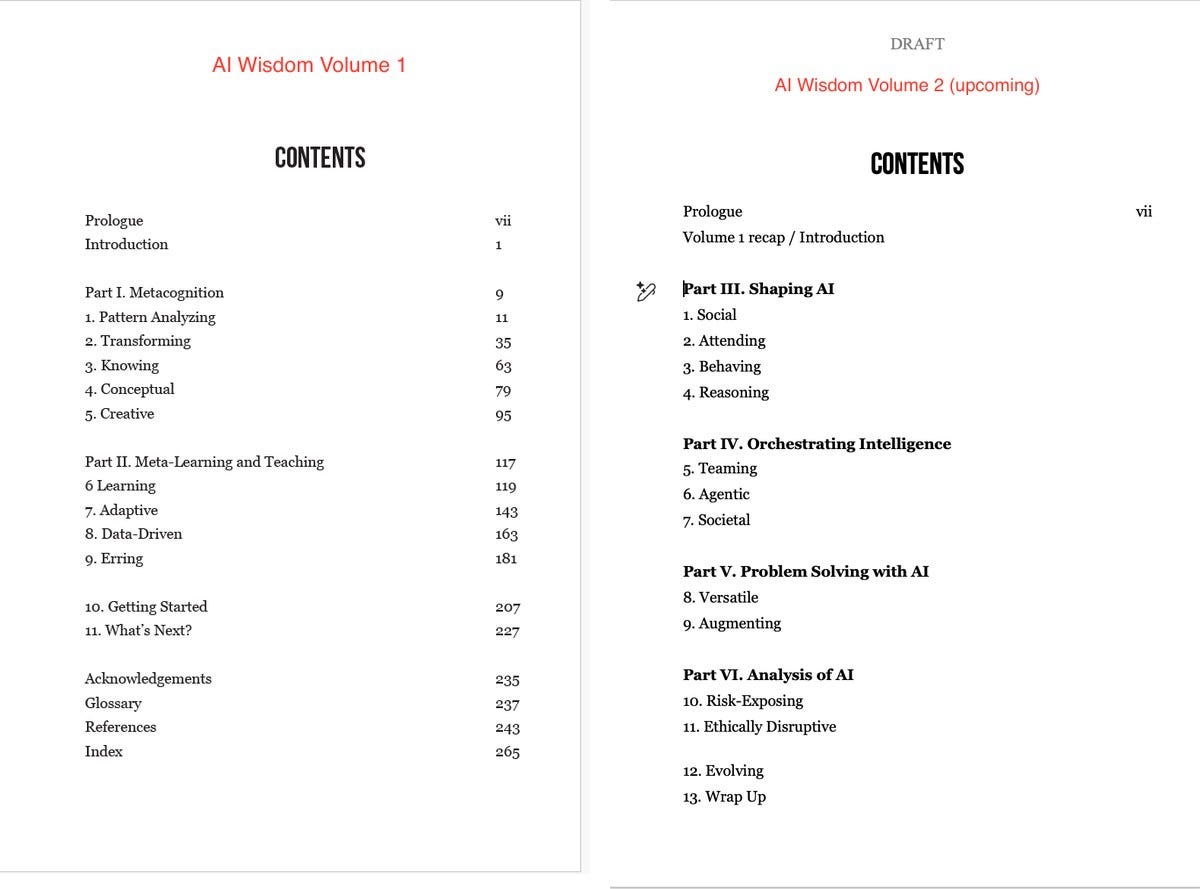

I think I have a way forward, but I didn’t think I could get anywhere in the conversation if people didn’t know what I’m talking about. So I’ve written AI Wisdom Volume 1: Meta-Principles of Thinking and Learning and am working on Volume 2. The Table of Contents for each is below.

I think the talk I gave at Sequoia Con 2025 in April, days after the book came out, is still the best summary of why the book and its content matter so much, even in a world where AI didn’t demand it. It describes a couple of examples of what the meta-principles of AI are, how those relate to other forms of intelligence (mind, collective, …) and learning, and how those AI roots help us better understand how to judge, create, critique, collaborate, manage, and learn better around other people as well. It’s the video at the beginning of this post.

I grew up technically in a different AI era and a different sub-realm than most others in AI, with my education more closely tied to neuroscience and perceptual and learning psychology than to, say, math. I was the AI guy in graduate school who audited neuroscience medical school classes and studied mammalian systems. So even when I sit down with other AI researchers, they are often looking at the field differently than I am. They may understand why the math does what it does, but they may not have tied that in their minds to the true challenges the math is trying to address.

Those challenges face any intelligence, any learning system. There are many differences between AI thinking and learning and human versions, but at the most fundamental level they’re both trying to make sense of the world, and adapt to its changing conditions.

There are more commonalities than people think, but unfortunately neither how AI works nor how the brain works is taught much. It’s time that changed, because our technology now has more in common with a brain than with conventional machines.

©2025 Dasey Consulting LLC

Kudos again. I believe the interweaving of AI, human intelligence, and a radical revamp of ‘skills’ is underway. But here’s the main disruption: We’re going to figure out the brain is a quantum organ that learns through resonance, not a collection of biochemicals that somehow ‘thinks.’ The AI-Human interface will shift.