AI Reasoning Models (3 of 3)

Intelligence from Pattern Analysis and Relationships

In Parts 1 (“The Cognitive Architecture”) and 2 (“Teaching the Key Skills”) of this series, I explored how AI reasoning works through an analogy to the brain’s System 1 and System 2 architectures, and then described the skills that students need to deal with AI reasoning systems effectively and safely. I examined the technical methods that enable AI to break down complex problems and self-evaluate, and described the parallel between managing AI reasoning processes and managing other people.

This final installment on AI reasoning takes a more abstract view. An AI with reasoning still uses neural networks for pattern analysis, but the patterns are increasingly abstract and the interacting elements increasingly sophisticated: not just neurons, but other pattern analyzers and team members that are themselves made of connected neurons.

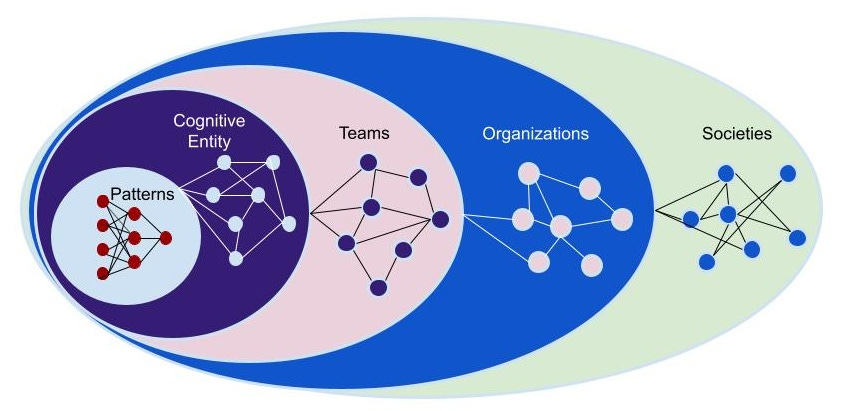

Intelligence is an emergent property of relationships. AI engineers are climbing a ladder of sophistication in increasingly varied ways, connecting smarter things in more complex and nuanced arrangements. Right now AI reasoning systems use full AIs either as collaborating parts of a cognitive architecture or as full teammates, but as the figure shows, there are further rungs on the ladder, each likely to bring dramatically more capable systems.

Intelligence as Emergent Complexity Across Multiple Scales

Think about intelligence as emerging from relationships across multiple scales. Consider the continuum: neurons firing in patterns to create thoughts, brain regions coordinating to create complex reasoning, and individuals collaborating to create collective intelligence that exceeds what any single person could achieve.

Each level is built from the components below it interacting in intertwined and often complex ways. Brain regions are made up of millions of neurons working together. Teams are made up of individuals, each with their own complex neural networks. At every level, intelligence emerges either from the sheer scale of simple components interacting, or from increasingly sophisticated relationships between already-intelligent components, or both.

AI engineers are essentially playing across this entire spectrum when they design reasoning systems. Some methods operate more like neural networks—millions of simple mathematical operations creating emergent behaviors through massive scale. Others work more like specialized brain regions, where different AI components handle distinct cognitive functions and coordinate their outputs. Still others function like teams of individuals, each contributing different expertise to solve complex problems.

This nested structure is key to understanding why AI reasoning works. Traditional large language models operate primarily at the neural network level through pattern recognition at massive scale: billions of simple connections learning to transform text inputs into appropriate text outputs. When AI systems implement System 1 and System 2 architectures, they're adding a layer of different AI components that transform raw inputs into different types of analysis (quick intuitive responses versus deliberate reasoning), then coordinate those outputs.

Methods like Tree of Thoughts operate like different cognitive processes within a single mind. The system generates several candidate reasoning branches, and a higher-level search procedure decides which paths to pursue based on evaluations of promise or logical consistency. Self-Consistency Decoding works much like polling a team of independent experts: the same problem is solved many times over as separately sampled reasoning paths (usually drawn from a single model, with each run involving billions of neural connections), and a simple coordination step, typically a majority vote, reduces those outputs to a single consensus answer.
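To make the "team of experts" idea concrete, here is a minimal sketch of the voting step behind self-consistency. It is an illustration under stated assumptions, not how any production system is built: `noisy_solver` is a hypothetical stand-in for one sampled reasoning path (a real system would sample a language model), and the 30% error rate is invented for the demonstration.

```python
import random
from collections import Counter


def noisy_solver(a, b, rng, error_rate=0.3):
    """Hypothetical stand-in for one sampled reasoning path.

    Adds a and b, but with probability error_rate slips by one.
    """
    answer = a + b
    if rng.random() < error_rate:
        answer += rng.choice([-1, 1])
    return answer


def majority_vote(answers):
    """Coordination step: return (most common answer, number of votes)."""
    return Counter(answers).most_common(1)[0]


def self_consistency(a, b, n_samples=25, seed=0):
    """Sample n_samples independent attempts, then vote on the result."""
    rng = random.Random(seed)
    answers = [noisy_solver(a, b, rng) for _ in range(n_samples)]
    return majority_vote(answers), answers


if __name__ == "__main__":
    (winner, votes), answers = self_consistency(17, 25)
    print(f"attempts: {answers}")
    print(f"consensus: {winner} ({votes} of {len(answers)} votes)")
```

Each attempt is unreliable on its own, yet the vote is usually right because independent errors rarely agree with each other. The intelligence here lives in the relationship between attempts, not in any single one.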

AI engineers can dial the sophistication up or down at each level. They can create simple neural networks that rely on scale alone, or sophisticated multi-agent systems where different AIs (themselves built from millions of simple operations) specialize in different cognitive functions and collaborate through complex coordination patterns. When we go back and forth between different conversations or AIs to get a complex task done, we’re creating those same abstract relationships between parts of a process or line of thinking by hand. We’re all AI engineers, using entire AIs as our interaction elements.

Pattern Recognition at Different Levels of Abstraction

What's fascinating—and often missed in discussions of AI reasoning—is that pattern recognition operates at every level of this complexity spectrum, but the types of input-output transformations being learned become increasingly sophisticated and abstract.

At the neural network level, individual connections learn simple patterns: this combination of input signals should produce this output signal. Scaled up across millions of connections, these simple transformations learn complex patterns in text—which words tend to follow other words, which phrases express certain concepts, how to transform a question into an appropriate answer format.

At higher levels of organization, different AI components learn to recognize different types of abstract patterns in their inputs and transform them accordingly. A System 1 component might learn patterns that transform complex questions into plausible-sounding reasoning chains. A System 2 component learns patterns that transform those reasoning chains into assessments of logical validity—taking reasoning steps as input and outputting judgments about consistency, completeness, or logical errors.

For example, a reasoning critic model takes reasoning chains as input and transforms them into quality assessments as output. But the patterns it's learned to recognize are incredibly sophisticated: logical consistency patterns (does premise A actually support conclusion B?), argumentation structure patterns (does this follow a valid reasoning framework?), factual coherence patterns (do these claims align with established knowledge?). The critic has learned to transform complex reasoning into structured evaluations of logical quality.
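The shape of that transformation (reasoning chain in, structured assessment out) can be sketched in a few lines. This is only an illustration: a real critic is a learned model handling open-ended language, whereas the hypothetical `critique` function below is hand-written and only checks simple arithmetic steps of the form `a op b = c`. The `Assessment` fields are likewise assumptions made for the example.

```python
import operator
from dataclasses import dataclass
from typing import Optional

# Operations the toy critic knows how to verify.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


@dataclass
class Assessment:
    valid: bool
    first_bad_step: Optional[int]
    note: str


def critique(chain):
    """Check every step of the form 'a op b = c' and report the first error."""
    for i, step in enumerate(chain):
        lhs, claimed = step.split("=")
        left, op, right = lhs.split()
        expected = OPS[op](int(left), int(right))
        if expected != int(claimed):
            note = f"step {i}: {lhs.strip()} is {expected}, not {claimed.strip()}"
            return Assessment(False, i, note)
    return Assessment(True, None, "all steps check out")


if __name__ == "__main__":
    print(critique(["12 * 3 = 36", "36 + 5 = 41"]))
    print(critique(["12 * 3 = 36", "36 + 5 = 42"]))
```

The point is the interface rather than the rules inside it: the critic never solves the problem itself, it only judges someone else's reasoning and says where it breaks.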

These aren't simple word associations or statistical correlations. These are sophisticated learned transformations that can take abstract relationships between ideas as input and produce structured assessments of reasoning quality as output—the same kinds of pattern recognition and transformation that emerge when human teams collaborate effectively.

Increasingly, AI systems learn these transformation patterns by studying the inputs and outputs of other AI systems, creating recursive feedback loops of emergent intelligence. Self-consistency methods turn multiple AI reasoning attempts into consensus answers, which can then be used as training examples for later models. Constitutional AI trains a model on critiques, revisions, and preference judgments that an AI produces by following a written set of principles.

The selection of training metrics becomes critical because it determines what input-output transformations the system learns to recognize as "good." Train an AI to optimize for human approval ratings and it learns to transform any input into outputs that tell people what they want to hear rather than what's true. Train it to maximize engagement and it learns transformations designed to provoke rather than inform.
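A toy sketch shows how much rides on that choice. The three candidate responses and all of their scores below are invented for illustration, and simple selection stands in for what training would gradually reinforce. The only thing that changes between calls is which metric counts as "good."

```python
# Hypothetical candidate replies to "What do you think of my plan?"
# All scores are made up for this illustration.
CANDIDATES = [
    {"text": "Your plan has a serious flaw in step 2.",
     "accuracy": 0.9, "approval": 0.3, "engagement": 0.4},
    {"text": "Great plan, nothing to change!",
     "accuracy": 0.2, "approval": 0.9, "engagement": 0.5},
    {"text": "Experts HATE this plan. Here is why...",
     "accuracy": 0.3, "approval": 0.4, "engagement": 0.95},
]


def best_by(metric, options=CANDIDATES):
    """Return the reply that scores highest on the chosen metric."""
    return max(options, key=lambda c: c[metric])["text"]


if __name__ == "__main__":
    for metric in ("accuracy", "approval", "engagement"):
        print(f"{metric:>10}: {best_by(metric)}")
```

Same candidates, same selection code, three different winners. A system optimized over many rounds against one of these metrics would drift toward producing that kind of reply by default.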

But this also opens up intriguing possibilities. The same basic pattern recognition architecture could be trained on datasets that reward different types of transformations—learning to transform problems into creative leaps rather than logical steps, or learning to transform ideas into feasibility assessments rather than supportive arguments, creating artificial cognitive diversity through different specialized transformation patterns.

Beyond "Stochastic Parrots"

Many dismiss AI as "stochastic parrots" or claim it's "just pattern matching." Just? Yes, AI systems recognize patterns and perform transformations—but so do human brains, human teams, and human institutions. The question isn't whether pattern recognition is happening, but at what level of abstraction and sophistication.

The dismissive phrase "just pattern matching" reflects a category error. It's like saying human intelligence is "just neurons firing" or that a symphony orchestra is "just people making noise." The statement is technically accurate but misses the emergent complexity that arises from the nested interactions between components at multiple scales.

Intelligence—whether artificial or biological—emerges from relationships, and those relationships can be sophisticated in three key ways: through sheer scale (millions of simple components), through the intelligence of individual components being related (smart neurons, smart team members), or through the sophistication of the coordination mechanisms themselves (complex rules for how components interact and influence each other).

Understanding AI reasoning as emergent intelligence across multiple scales of complexity has profound implications for how educators teach and students learn. If intelligence emerges from relationships at multiple nested levels, teachers should help students think in terms of systems and interactions, not just individual components. This means developing skills in understanding how simple rules can create complex behaviors, recognizing patterns at different scales of abstraction, and designing effective collaboration and coordination mechanisms.

Instead of competing with AI's pattern recognition at any single level, educators should teach students to work with AI systems across the entire complexity spectrum. Students need to understand which level of AI complexity matches which type of problem, and how the nested structure means that even "simple" AI tasks involve millions of underlying pattern recognition operations.

Humans excel at creating some types of emergent intelligence that current AI systems struggle with. Emotional and empathetic coordination requires shared lived experience that transforms interpersonal inputs into appropriate social outputs. Creative collaboration demands intuitive leaps and cultural understanding that can transform abstract ideas into culturally resonant innovations. Ethical reasoning needs human values and moral intuition to transform complex situations into principled judgments. But it’s important for people to realize that AI could become capable of each of these if the relevant information, such as lived experience, were available for training. AI can’t currently experience the world the way we do, but that gap will likely narrow.

Perhaps most importantly, students need to understand that the emergent properties of intelligent systems—whether human teams or AI architectures—depend critically on how the relationships and interactions are structured at every level of the nested hierarchy.

The pattern recognition foundation of AI reasoning isn't a limitation—it's a powerful capability that operates across multiple scales of complexity, from simple neural interactions to sophisticated team collaboration. Rather than dismissing AI as "just pattern matching," educators should ask: At what scale of complexity should artificial intelligence be organized for different tasks? How can AI systems leverage the strengths of massive scale, intelligent components, and sophisticated coordination mechanisms simultaneously?

Intelligence comes from relationships—but those relationships exist at multiple nested levels, from simple connections scaled up to massive networks, to intelligent components coordinating through sophisticated rules. AI engineers are learning to design those relationships across scales never seen before. Educators need to prepare students for a world where intelligence emerges not just from individual minds, but from the sophisticated coordination between human and artificial intelligence systems working together across multiple levels of complexity.

This concludes the three-part exploration of AI reasoning models in education. As these systems continue to evolve across multiple scales of complexity, the fundamental insight remains: understanding AI reasoning as emergent intelligence arising from designed relationships at multiple nested levels, not just pattern matching, is essential for students. An added benefit is that they also learn about relationships and about institutions composed of people that demonstrate intelligent behavior.

©2025 Dasey Consulting LLC. All Rights Reserved.