In Part 1, “Cognitive Architectures,” I explored how AI reasoning works through the lens of System 1 and System 2 thinking, examining the technical methods that enable AI systems to break down complex problems, evaluate their own work, and improve their outputs. I described how test-time compute allows AI to spend more processing power thinking through difficult questions, which brings dramatic performance improvements but also new challenges in managing these powerful tools.

Now I turn to the practical question: What skills do students need to work effectively with reasoning AI? The answer involves recognizing when tasks have hidden complexity and learning to direct AI reasoning processes effectively.

The trickiest part about working with reasoning AI is that many seemingly simple tasks have hidden complexity. Take "Should I switch to a different major?" This looks like a straightforward personal decision, but underneath lie questions about job market trends, personal interests that might evolve, financial implications, family expectations, and opportunity costs, all of which interconnect in complex ways.

Students need to develop intuition for spotting hidden complexity. Clues that reasoning might help include: the task involves multiple interdependent factors, the stakes are high if you get it wrong, there are trade-offs to consider, or you need to validate information from multiple perspectives. Knowing when these capabilities are needed, and when they're overkill, also matters for energy conservation, since reasoning models can use 10 to 100 times more computational resources than simple responses.

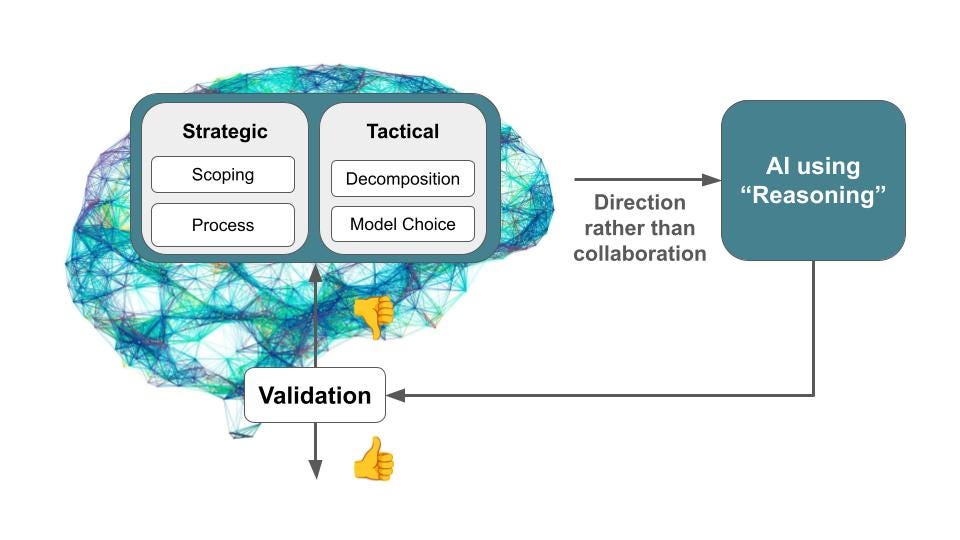

Once students can recognize when reasoning is needed, they need to learn how to manage it effectively. Managing an AI's reasoning process requires moving from being a conversational partner to being a cognitive director. This managerial role has five core skills that students must master.

1. Scoping and Goal Setting

This is the most important and most frequently botched step. The challenge is that our initial goals are often proxies for what we really want. "Help me decide if I should move to a different city" sounds clear, but what you really want might be "Help me figure out how to build a life that feels more exciting and connected." The AI might optimize for job opportunities and cost of living when you actually care about community and personal growth.

AI will dutifully optimize for whatever goal you give it. If you say "find the city with the best job market," it might reason through employment statistics while ignoring quality of life factors that matter more to you. If you say "find the most exciting city," it might focus on nightlife and entertainment when you care more about outdoor activities and cultural diversity. The AI's reasoning process needs to consider your values, skills, and personal definition of impact—not just external markers of success.

The skill is learning to iterate on goal definition. Start with your surface goal, then ask: "If the AI achieved this perfectly, would I actually be satisfied?" Often the answer reveals what you really care about. As these skills progress, students can learn to spot potential issues before they arise. For example, if we forget to tell the AI what resources we have available to solve a challenge, it might assume we have all the time and money in the world.

Teaching Goal Clarification: Have students practice translating vague wants into specific, actionable goals. Take "I want to be more social" and work toward "I want to build closer friendships with people who share my interests and feel comfortable in group settings."

Classroom Activity: Give students proxy goals like "I want to eat healthier" or "I want to be more organized" and have them dig deeper into what those goals are really trying to achieve. What would "eating healthier" actually do for you (more energy, better appearance, reduced anxiety about food choices)? Are those the true goals, and do they reveal additional solution paths? This builds the skill of goal clarification whether they're directing AI or making their own decisions.

2. Process Steering

This is where students learn to direct thinking processes, not just request outputs. The key insight is that some tasks benefit from explicit reasoning frameworks while others don't.

Compare these approaches to the same underlying question about choosing a living situation:

Simple version: "Should I live on campus or off campus next year?"

Reasoning version: "Help me decide between on-campus and off-campus housing by first analyzing the financial implications including rent, utilities, meal plans, and transportation costs. Then consider the social factors like community building and independence. Then evaluate practical issues like commute time and study environment. Finally, weigh these against my priorities for academic success and personal development."

The second version activates the AI's reasoning capabilities by explicitly requesting a structured thinking process. You're not just asking for pros and cons—you're directing how to think through the problem systematically.
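For readers who assemble prompts in code, the structure of the reasoning version can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `build_reasoning_prompt` and its parameters are hypothetical names invented here, not part of any AI product, and the step wording is taken from the housing example above.

```python
def build_reasoning_prompt(decision, steps, priorities):
    """Assemble a prompt that spells out the order of analysis.

    Hypothetical helper for illustration only; it builds a string and
    does not call any AI service.
    """
    lines = [f"Help me decide {decision}."]
    # Numbering the steps makes the intended thinking sequence explicit.
    for number, step in enumerate(steps, start=1):
        lines.append(f"Step {number}: {step}")
    # Close by tying the analysis back to what the person actually values.
    lines.append(
        "Finally, weigh these against my priorities for "
        + " and ".join(priorities) + "."
    )
    return "\n".join(lines)


prompt = build_reasoning_prompt(
    decision="between on-campus and off-campus housing",
    steps=[
        "Analyze the financial implications, including rent, utilities, "
        "meal plans, and transportation costs.",
        "Consider social factors like community building and independence.",
        "Evaluate practical issues like commute time and study environment.",
    ],
    priorities=["academic success", "personal development"],
)
print(prompt)
```

Separating the steps from the priorities mirrors the point of this section: the person supplies the thinking process and the values, and the AI fills in the analysis.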

Students should learn to specify reasoning frameworks: "Use decision theory to evaluate these options," or "Apply stakeholder analysis to understand this conflict," or "Think through the long-term consequences of each choice." The framework shapes how the AI approaches the problem.

Teaching Process Direction: Students need to learn common reasoning frameworks and when to apply them. Systems thinking for understanding complex problems. Stakeholder analysis for navigating conflicts. Risk assessment for evaluating uncertain outcomes. Ethical frameworks for moral dilemmas.

Classroom Activity: Have students practice directing each other's thinking processes. One student gives another a dilemma (like "Should our school ban phones during class?") and specific reasoning instructions ("Consider multiple stakeholder perspectives, think about unintended consequences, and evaluate different implementation approaches"). This builds metacognitive skills that transfer to AI direction.

3. Problem Decomposition and Checkpoints

The difference between simple and complex tasks often comes down to how many steps are involved and whether errors or assumptions can compound. "What's the weather this weekend?" is single-step. "Should our family get a dog?" involves considerations about lifestyle, finances, time commitments, and family dynamics where assumptions in early steps affect everything that follows.

Decomposition builds on computational thinking skills, but with a crucial difference: the modules in AI reasoning can handle "squishy" transformations like weighing emotional factors, analyzing relationship dynamics, or evaluating cultural fit, not just the code-like logical operations students might know from programming.

Here's where strategic checkpoint placement becomes crucial. You need to identify decision points where the next steps are important enough that you want to intervene and shape what comes next. For the family dog decision:

1. What are our family's current time commitments and schedules?
2. What would be the monthly and annual costs of dog ownership?
3. Checkpoint: Do these time and money requirements fit our actual situation?
4. What type of dog would match our living situation and activity level?
5. How would a dog affect our family dynamics and responsibilities?
6. Considering all factors, does getting a dog align with our family's goals and capacity?

The checkpoint at step 3 lets you course-correct if the initial analysis doesn't match your family's reality before the AI builds recommendations on faulty assumptions about your time or budget.
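Students who know some programming may find it helpful to see the checkpoint idea as a small Python sketch. Everything here is an assumption made for illustration: `Step`, `run_plan`, `answer`, and `approve` are hypothetical names, `answer` is a stand-in for whatever AI call you would actually make, and `approve` represents the human deciding whether the work so far matches reality.

```python
from dataclasses import dataclass


@dataclass
class Step:
    question: str
    is_checkpoint: bool = False  # True means a human must sign off here


def run_plan(steps, answer, approve):
    """Work through steps in order, halting if a checkpoint is rejected."""
    transcript = []
    for step in steps:
        if step.is_checkpoint:
            # The human reviews everything produced so far.
            if not approve(step.question, transcript):
                transcript.append((step.question, "STOPPED: revise assumptions"))
                return transcript
            transcript.append((step.question, "approved"))
        else:
            # Stand-in for the AI working on one sub-question.
            transcript.append((step.question, answer(step.question, transcript)))
    return transcript


dog_plan = [
    Step("What are our family's current time commitments and schedules?"),
    Step("What would be the monthly and annual costs of dog ownership?"),
    Step("Do these time and money requirements fit our actual situation?",
         is_checkpoint=True),
    Step("What type of dog would match our living situation and activity level?"),
]

# Stub behaviors: the "AI" returns a placeholder, and the human rejects
# the checkpoint because the cost estimate does not match the family budget.
result = run_plan(
    dog_plan,
    answer=lambda question, so_far: f"(draft answer to: {question})",
    approve=lambda question, so_far: False,
)
for question, outcome in result:
    print(question, "->", outcome)
```

Because the checkpoint is rejected, the plan never reaches the question about dog type. That is the behavior you want: later steps are not built on assumptions the family has already flagged as wrong.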

Teaching Decomposition Skills: Students need to recognize when problems have sequential dependencies and where assumptions might cascade. They also need to identify strategic intervention points—where their input can most effectively shape the reasoning process.

Classroom Activity: Create scenarios where early assumptions dramatically affect later conclusions. Have students work through "Should our school switch to a four-day week?" in sequence, showing how assumptions about student transportation or family work schedules in early steps shape everything that follows. Practice identifying the best checkpoints for validating assumptions before they compound.

4. Model Selection

Students need to learn when to use reasoning AI versus regular AI versus prompting regular AI to think through problems explicitly. This isn't just about efficiency—it's about matching tool to task and managing computational resources responsibly.

For simple factual questions like "What are the requirements for in-state tuition?" you don't need reasoning capabilities. For complex decisions like "Should I double-major or focus on internships?" you want the AI to think through multiple factors and their interactions systematically.

But there's a middle ground: tasks that could benefit from reasoning but don't require the full computational expense. "What scholarships am I eligible for?" becomes more valuable when you prompt a regular AI to "Check eligibility requirements carefully and explain any borderline cases where I might or might not qualify." You're getting analytical thinking without the full reasoning model cost.

The skill is learning to recognize three categories:

Simple tasks: Factual lookups, basic definitions, procedural how-to questions

Complex tasks: Multi-stakeholder decisions, problems involving personal values, situations where cultural or emotional factors matter

Improvable tasks: Simple questions that become more valuable with additional analysis or verification
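The three categories can be expressed as a simple decision rule, sketched below in Python. This is a teaching aid under loud assumptions: `Approach` and `choose_approach` are hypothetical names, and the two yes/no inputs are judgments a person makes about the task, not something the code can detect on its own.

```python
from enum import Enum


class Approach(Enum):
    STANDARD = "regular AI, plain question"
    PROMPTED = "regular AI, prompted to analyze carefully"
    REASONING = "reasoning AI"


def choose_approach(involves_values_or_stakeholders, benefits_from_verification):
    """Map a human judgment about the task to one of the three categories."""
    if involves_values_or_stakeholders:
        # Complex task: multiple stakeholders, personal values,
        # or cultural and emotional factors.
        return Approach.REASONING
    if benefits_from_verification:
        # Improvable task: a simple question made more valuable
        # by extra analysis or checking.
        return Approach.PROMPTED
    # Simple task: factual lookup, definition, or how-to.
    return Approach.STANDARD


tuition = choose_approach(False, False)        # in-state tuition requirements
scholarships = choose_approach(False, True)    # scholarship eligibility
double_major = choose_approach(True, True)     # double-major vs. internships
print(tuition.value, scholarships.value, double_major.value, sep="\n")
```

The rule itself is trivial. The real skill is answering the two questions honestly for a given task, which is exactly what the classroom practice below is meant to build.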

Teaching Model Selection: Present students with scenarios where the same basic question could be simple or complex depending on context. "Where should I go to college?" is simple if you just want a list of schools, complex if you need to weigh academic fit, financial aid, family expectations, and career goals.

Classroom Activity: Give students the same underlying question framed different ways and have them identify which approach matches different user needs. This builds judgment about when reasoning capabilities are worth activating.

5. Validation, Debugging, and Iteration

Whether the AI used reasoning or not, the student is ultimately responsible for the output. But validating reasoning AI outputs requires different skills than fact-checking simple responses, and sometimes the reasoning process needs course correction mid-stream.

For simple tasks like "What are the application deadlines for graduate school?" validation means checking if the dates are current and complete. For reasoning tasks like "Should I go to graduate school or start working?" validation means checking whether the AI's logic makes sense, whether it considered factors important to your specific situation, and whether its assumptions about your priorities are accurate.

Students need frameworks for evaluating reasoning quality:

Logic check: Do the conclusions follow from the premises?

Completeness check: Did it consider factors that matter to your specific situation?

Assumption check: Are the underlying assumptions about your values and circumstances reasonable?

Perspective check: Did it consider viewpoints and consequences you care about?

Beyond final validation, students need debugging skills. When reasoning goes off track—maybe it's overweighting financial factors when you care more about personal fulfillment, or focusing on short-term considerations when you're thinking long-term—they should know how to redirect it, ask for alternative approaches, or even use multiple AIs to cross-check each other's reasoning.

Teaching Validation and Debugging: Students need practice spotting flawed reasoning in low-stakes contexts before they encounter it in important decisions. This includes recognizing when reasoning sounds sophisticated but misses crucial factors, when cultural assumptions are embedded in the logic, or when the reasoning process needs to be restarted with different priorities.

Classroom Activity: Give students examples of reasoning outputs (both AI and human) with deliberate issues—missing perspectives, cultural blind spots, unstated value judgments. Have them develop approaches for spotting these problems and practice redirecting the reasoning process when it goes astray.

These skills develop through experience with decisions that have real stakes for students. The specific tasks matter less than ensuring students can evaluate whether the reasoning process makes sense for problems they understand. Examples might include personal decisions about academic and career paths, community issues that affect their daily lives, or family choices they're involved in making.

Begin with recognition and goal-setting skills using problems students find meaningful. Before introducing any AI tools, build their ability to spot hidden complexity, clarify their actual goals, and structure thinking processes. Once they understand these processes from the inside, they're much better equipped to direct AI systems that use similar principles.

What I’ve described could easily be taught without ever mentioning AI, since most of these skills apply equally to delegating work to humans and to machines. But managing AI differs from managing people in distinct ways, and drawing out those contrasts is important for building students' intuitions about how working with AI differs from working with people.

We are training students for a world where AI reasoning capabilities will only grow more sophisticated. The question isn't whether they'll work with reasoning AI. It's whether they'll recognize when reasoning is needed and direct it skillfully. In Part 3 of this series, I'll describe how even the reasoning features of generative AI rely on pattern analysis.

©2025 Dasey Consulting LLC. All Rights Reserved.