There's a genuine, understandable concern bubbling up about the carbon footprint of training and using massive AI models. And while concern for our planet and resource consumption is always valid and necessary, I worry the intense focus currently directed at AI's energy use is missing the forest for the trees—or perhaps, missing the complex global climate system for the individual server rack.

Considering the full spectrum of AI's potential impacts, the level of attention devoted specifically to its energy consumption feels disproportionate compared to other urgent societal and ethical AI challenges, especially when weighed against AI's potential to contribute to climate solutions.

This isn't to dismiss the energy usage; it's real and requires responsible management. But fixating primarily on the kilowatt-hours of a GenAI query obscures the bigger picture. It risks leading to trivial interventions ("don't use AI for that silly image idea") while distracting from more systemic issues and potentially more impactful actions. People can fret about the fractional energy cost of an AI query, yet often overlook the massive, ongoing energy demands of other digital mainstays like video streaming and social media, which currently dwarf AI's overall footprint.

The Tangled Web of AI Climate Impacts

The narrative often goes like this: AI models are huge, training takes energy, running them takes energy, therefore AI = bad for the climate. It's simple, digestible, and makes for a neat discussion point. But it's dangerously incomplete. AI's relationship with energy and climate is complex, a tangled web of pushes and pulls.

For some AI tasks, a comparison with the energy footprint of the human work they might replace or augment seems appropriate. A human help desk also uses PCs, servers, lights, and HVAC. An AI might streamline that, even if it adds its own energy draw. The net change is what matters. (To be clear, my goal isn't fewer human workers, but understanding these energy trade-offs is crucial, even if only to make the point that optimizing purely for energy can create other distortions.)

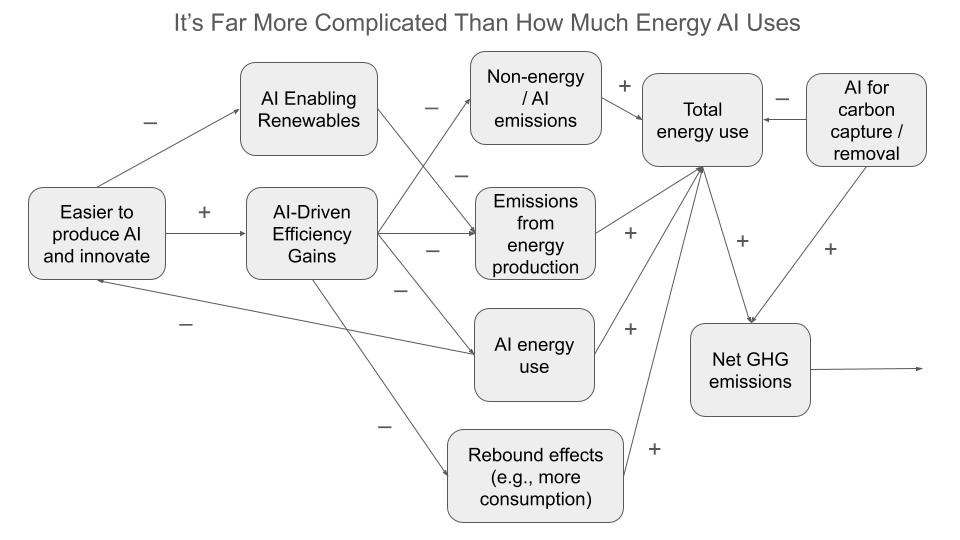

Beyond the comparison with human work, AI affects the climate through many pathways within a complex system, as in the figure that began this article. The net result at the output (“Net GHG [Greenhouse Gas] Emissions”) depends on many factors that AI influences.

One reality is that AI isn't just consuming energy; it's increasingly involved in managing it across entire systems, and inventing new solutions:

Tuning Existing Infrastructure: AI algorithms are being deployed to optimize operations in energy-intensive sectors, like improving the combustion efficiency in power plants or improving factory efficiency.

Unlocking Renewable Potential: Integrating variable sources like wind and solar into the grid is a major challenge. AI is proving essential for improving energy forecasting, optimizing battery storage, and managing grid stability, enabling a higher percentage of renewables.

Driving System-Wide Efficiency: Beyond generation, AI identifies energy savings across complex systems. This includes optimizing energy management of buildings, reducing fuel consumption through smarter logistics and traffic control, and minimizing waste in industrial processes and supply chains. Companies are also increasingly leveraging AI for significant efficiency gains in data center operations, which are themselves major energy consumers.

Accelerating Climate Science & Solutions: AI significantly speeds up climate modeling and environmental monitoring. Perhaps most critically, AI is drastically accelerating the research and development cycle for green technologies. AI helps researchers find promising solutions orders of magnitude faster than traditional methods.

In aggregate, the World Economic Forum estimates a reduction of 3-6 gigatons of CO2 equivalent per year (GtCO2eq/y) in emissions as a result of AI in the power, meat and dairy, and light road transport industries, against an addition of 0.4-1.6 GtCO2eq/y from AI's own energy draw.
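To make the arithmetic behind these ranges explicit, here is a minimal sketch in Python that combines the two quoted ranges into a net range. It assumes the two ranges can simply be subtracted at their extremes (smallest reduction against largest addition, and vice versa). The function name `net_reduction_range` is hypothetical, and the result is a back-of-the-envelope bound, not a figure published by the World Economic Forum.

```python
def net_reduction_range(reduction, added):
    """Return (low, high) bounds on net emissions reduction in GtCO2eq/y.

    reduction: (low, high) emissions avoided through AI applications
    added:     (low, high) emissions added by AI's own energy draw
    """
    red_low, red_high = reduction
    add_low, add_high = added
    # Worst case: smallest reduction paired with largest added emissions.
    low = red_low - add_high
    # Best case: largest reduction paired with smallest added emissions.
    high = red_high - add_low
    return round(low, 2), round(high, 2)


# Ranges quoted in the text above (GtCO2eq/y).
low, high = net_reduction_range(reduction=(3.0, 6.0), added=(0.4, 1.6))
print(f"Net reduction: {low} to {high} GtCO2eq/y")
```

Under these assumptions the net effect remains a reduction across the whole range, roughly 1.4 to 5.6 GtCO2eq/y, even when the least favorable ends of both estimates are paired.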

A common retort is that these are applications of specialized AI, not the Generative AI that grabs headlines and enters classrooms. But this distinction is becoming increasingly blurred and often misses the bigger picture. Firstly, GenAI tools are already being used to accelerate the development of these specialized models and analysis techniques. Secondly, GenAI itself, with its ability to process vast amounts of unstructured data and adapt to context, is finding direct applications in complex system modeling, scenario planning, and even optimizing the control strategies for physical systems, effectively making specialized AI more powerful and adaptable.

Of course, there are potential rebound effects. If AI makes something cheap and easy (like video translation or scientific simulation), we might do more of it, adding new compute loads that offset some savings. This is real, but it's part of the system, not the whole story.

Focusing only on the direct energy draw ignores all the potential benefits. Teaching students to simply "use less AI" because it uses some energy is like telling them to solve traffic congestion by not driving, without considering public transport, smarter traffic lights, or remote work. It’s a simplistic answer to a complex systems problem, and it doesn't equip them with the critical thinking needed to navigate the real trade-offs.

Where We Should Focus

The focus on AI energy demand feels excessive relative to other AI issues. AI companies have a massive, built-in financial incentive to reduce the energy consumption of their models. Electricity is a huge operational cost, so every efficiency gain directly boosts their bottom line. Corporate motivations often invite skepticism, and incentives alone are not sufficient: continued pressure for transparency, clean energy usage, and accountability through policy is absolutely essential. Still, the fundamental direction of this economic incentive pushes towards energy reduction. This alignment is less clear in other critical areas of AI impact.

Compounding this is the incredible, often overlooked, pace of innovation, both in AI's own efficiency and in its potential to drive climate solutions. Discussions about future impacts frequently extrapolate from today's technology, effectively giving zero credit to future improvements. On the efficiency front, the energy required to train state-of-the-art models and perform inference tasks has plummeted by orders of magnitude in just the past three years. Simultaneously, AI is accelerating the pace of discovery in areas crucial for climate action. Future gains aren't guaranteed, of course, but discounting the high probability of continued, AI-accelerated innovation across both efficiency and climate solutions seems unwise when projecting net future impacts.

But AI leaders are clearly worrying about energy constraints. Shouldn’t we listen to them? This narrative also requires a systems view. Much of this concern, particularly in the US, stems not just from the total anticipated energy use, but from the concentration of that demand in specific locations due to efforts to onshore data centers and the limitations of our current electrical grid infrastructure. Adding a large data center can strain local infrastructure. So, industry calls for more power often reflect the need for reliable, concentrated power in specific places to overcome infrastructure bottlenecks, rather than solely a statement about an unmanageable global increase in net energy consumption. Understanding this distinction helps educators contextualize these statements. The problem highlighted is often the design of our existing infrastructure as much as total energy demand.

This context is crucial. It shifts the focus from simply "AI uses too much energy" to a more complex discussion about infrastructure planning, grid modernization, and the geographical distribution of computing resources.

The AI energy demand aspect shouldn't overshadow the other critical issues surrounding AI where incentives aren't so neatly aligned with societal good, and which fall much more directly into educators' sphere of influence (e.g., potential cognitive offloading, pedagogical adaptation). Attention is a finite resource. Focusing on the energy use of AI queries may not be impactful. Perhaps advocating for policies that dedicate significant GPU compute resources specifically to climate modeling and innovation would leverage AI's strengths more effectively for the climate cause than simply decrying the energy cost of generating an image.

When the energy argument falters, the conversation often pivots to water consumption in data centers. It's true that traditional evaporative cooling methods in data centers use a lot of water. But again, this overlooks the potential for AI to be part of the solution. Highly efficient immersion cooling techniques exist but have been hampered by maintenance difficulties. AI-powered robotics could potentially automate this maintenance, making widespread adoption feasible and dramatically reducing water usage, another example where AI could help mitigate its own perceived environmental costs.

The idea that we should unduly restrict or slow down the exploration of AI's potential solely because of its energy footprint feels shortsighted. Climate change is perhaps the most complex, multifaceted challenge humanity has ever faced. Frankly, I don't see how we solve it without AI. We need AI to model the complexities, find novel solutions, understand behavior change, and optimize adaptation. Asking educators and students to view AI primarily as a climate problem risks blinding them to its potential as a critical climate solution.

Let's have more nuanced conversations. Let's push for policies that harness AI for climate action. Let's teach students to analyze the entire system of AI's impact, weighing the costs against the benefits and the potential for innovation.

Let's Get Real About AI and Climate

The energy consumption of AI is real, and responsible management, ideally powered by clean energy, is essential. But the level of attention currently focused on it, particularly in educational contexts, is disproportionate to other major AI concerns. It risks presenting a simplistic view of a complex systems issue, potentially leading to feel-good but ultimately unimpactful actions.

Let's broaden the discussion. Let's talk about the net impact, the systems-level effects, and the incredible potential alongside the risks. Let's equip students with the tools to analyze complexity, not just react to headlines. AI's relationship with our planet is far more intricate and interesting than just a line item on an electricity bill.

©2025 Dasey Consulting LLC